Principal Component Analysis an aproach

This post will be commit until the end of month

Principle Component Analysis (PCA) for Data Visualization

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

%matplotlib inline

plt.style.use('seaborn')

Load Iris Dataset

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"

# loading dataset into Pandas DataFrame

df = pd.read_csv(url

, names=['sepal length','sepal width','petal length','petal width','target'])

df.head()

| sepal length | sepal width | petal length | petal width | target | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | Iris-setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | Iris-setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | Iris-setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | Iris-setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | Iris-setosa |

Standardize the Data

Since PCA yields a feature subspace that maximizes the variance along the axes, it makes sense to standardize the data, especially, if it was measured on different scales. Although, all features in the Iris dataset were measured in centimeters, let us continue with the transformation of the data onto unit scale (mean=0 and variance=1), which is a requirement for the optimal performance of many machine learning algorithms.

features = ['sepal length', 'sepal width', 'petal length', 'petal width']

x = df.loc[:, features].values

y = df.loc[:,['target']].values

x = StandardScaler().fit_transform(x)

pd.DataFrame(data = x, columns = features).head()

| sepal length | sepal width | petal length | petal width | |

|---|---|---|---|---|

| 0 | -0.900681 | 1.032057 | -1.341272 | -1.312977 |

| 1 | -1.143017 | -0.124958 | -1.341272 | -1.312977 |

| 2 | -1.385353 | 0.337848 | -1.398138 | -1.312977 |

| 3 | -1.506521 | 0.106445 | -1.284407 | -1.312977 |

| 4 | -1.021849 | 1.263460 | -1.341272 | -1.312977 |

PCA Projection to 2D

pca = PCA(n_components=2)

principalComponents = pca.fit_transform(x)

principalDf = pd.DataFrame(data = principalComponents

, columns = ['principal component 1', 'principal component 2'])

principalDf.head(5)

| principal component 1 | principal component 2 | |

|---|---|---|

| 0 | -2.264542 | 0.505704 |

| 1 | -2.086426 | -0.655405 |

| 2 | -2.367950 | -0.318477 |

| 3 | -2.304197 | -0.575368 |

| 4 | -2.388777 | 0.674767 |

df[['target']].head()

| target | |

|---|---|

| 0 | Iris-setosa |

| 1 | Iris-setosa |

| 2 | Iris-setosa |

| 3 | Iris-setosa |

| 4 | Iris-setosa |

finalDf = pd.concat([principalDf, df[['target']]], axis = 1)

finalDf.head(5)

| principal component 1 | principal component 2 | target | |

|---|---|---|---|

| 0 | -2.264542 | 0.505704 | Iris-setosa |

| 1 | -2.086426 | -0.655405 | Iris-setosa |

| 2 | -2.367950 | -0.318477 | Iris-setosa |

| 3 | -2.304197 | -0.575368 | Iris-setosa |

| 4 | -2.388777 | 0.674767 | Iris-setosa |

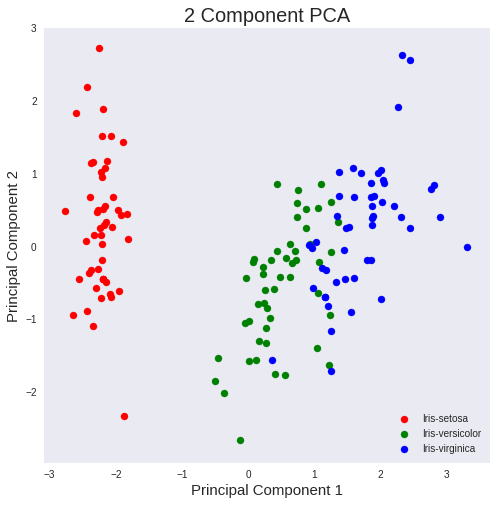

Visualize 2D Projection

Use a PCA projection to 2d to visualize the entire data set. You should plot different classes using different colors or shapes. Do the classes seem well-separated from each other?

fig = plt.figure(figsize = (8,8))

ax = fig.add_subplot(1,1,1)

ax.set_xlabel('Principal Component 1', fontsize = 15)

ax.set_ylabel('Principal Component 2', fontsize = 15)

ax.set_title('2 Component PCA', fontsize = 20)

targets = ['Iris-setosa', 'Iris-versicolor', 'Iris-virginica']

colors = ['r', 'g', 'b']

for target, color in zip(targets,colors):

indicesToKeep = finalDf['target'] == target

ax.scatter(finalDf.loc[indicesToKeep, 'principal component 1']

, finalDf.loc[indicesToKeep, 'principal component 2']

, c = color

, s = 50)

ax.legend(targets)

ax.grid()

The three classes appear to be well separated!

iris-virginica and iris-versicolor could be better separated, but still good!

Explained Variance

The explained variance tells us how much information (variance) can be attributed to each of the principal components.

pca.explained_variance_ratio_

array([0.72770452, 0.23030523])

Together, the first two principal components contain 95.80% of the information. The first principal component contains 72.77% of the variance and the second principal component contains 23.03% of the variance. The third and fourth principal component contained the rest of the variance of the dataset.

What are other applications of PCA (other than visualizing data)?

If your learning algorithm is too slow because the input dimension is too high, then using PCA to speed it up is a reasonable choice. (most common application in my opinion). We will see this in the MNIST dataset.

If memory or disk space is limited, PCA allows you to save space in exchange for losing a little of the data’s information. This can be a reasonable tradeoff.

What are the limitations of PCA?

- PCA is not scale invariant. check: we need to scale our data first.

- The directions with largest variance are assumed to be of the most interest

- Only considers orthogonal transformations (rotations) of the original variables

- PCA is only based on the mean vector and covariance matrix. Some distributions (multivariate normal) are characterized by this, but some are not.

- If the variables are correlated, PCA can achieve dimension reduction. If not, PCA just orders them according to their variances.